Linux – it’s like a shark or an iceberg. Most of it’s below the surface but it’s moving fast and you really ought to know about it.

The majority of people, if they’re aware of it at all, probably think of Linux as the non-commercial alternative to Windows or Mac favoured by people who use computers less to get things done than because they actually enjoy it. Which is a shame in a way, as it gives a wholly wrong impression of its significance. Linux is so much more than an operating system for nerds. Indeed you probably use it yourself, every day. Each time you visit a website the chances are good that you’re talking to a computer running Linux. Smart devices in your home like satellite boxes and DVRs, even TVs now, use Linux. If you have an Android phone, that’s based on Linux. Governments are adopting it, and it is far and away the favourite operating system for the world’s most powerful supercomputers.

So it should be surprising that on the workplace desktop – still the biggest, most visible, and most lucrative computer market sector – it runs a very distant third. How come?

Counterintuitively, because it’s free. After all, the PC is not ruled by Windows and Mac OS because they’re cheap. Rather, it’s because people can make money out of them. Huge ecosystems of supporting industries have grown around these computing platforms – software, hardware, services, training, maintenance, publishing – in no small part because there was a key partner there offering support and leadership.

How do you make money out of a system no one owns? With whom do you form a partnership? How can you be sure, when no one’s in charge, that it’s going to develop in a direction that will suit your business? It’s tricky.

Unless of course you take a leadership role yourself. It seems the best way to make money from Open Source Software like Linux is often to step up and be that key player. This is what Google did with Android, making it the world’s most popular phone OS. Or Red Hat, whose version of Linux is one of the most successful systems in the server sector. Each brought their own business model; Google of course is ultimately selling advertising, Red Hat its expertise and support.

If anyone is going to turn Linux into a household – and an office – name it is surely Canonical. This is the power behind Ubuntu, the most popular and user-friendly desktop version of Linux so far. And their vision doesn’t stop at the desktop – nothing worthy of the name could these days. In recent weeks they’ve launched Ubuntu editions for tablets, phones and TVs. It seems they plan to have devices running their software in every major market sector.

Ubuntu TV – It’s a lot like Windows Media Center except for the giving money to Microsoft part.

It’s an extraordinary ambition, and if they can pull it off then Canonical/Ubuntu will be up there with the big girls, sitting proudly alongside Google, Apple, and Microsoft. But can such a fabulous commercial edifice really be built on open foundations? So much of Linux is being developed by people who work for competing organisations – or who aren’t being paid to do it by anybody.

Indeed community disenchantment may already be starting to show. Ubuntu is no longer flavour of the month. Once hugely popular with the sort of Linux user who doesn’t actually want to reinvent the wheel but just needs something that can be installed and maintained with the minimum of fuss, Ubuntu – and its slightly geekier sisters Kubuntu and Xubuntu – drove all before it. No longer; the flavour now is Mint.

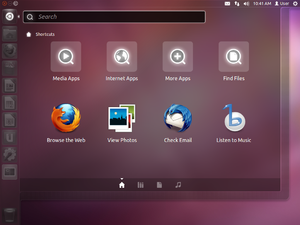

This is a different Linux variant (or ‘distro’, to use the jargon). Indeed it’s very much a variant of Ubuntu, just with Canonical’s more commercial ideas stripped out. (This ‘forking’ is perfectly legal in the OSS world – in fact it’s the whole idea. Ubuntu itself is based on the well-respected Debian distro.) Mint’s popularity though was given a huge boost when Canonical introduced their ironically-named Unity interface.

Some of the resentment of this was silly. Unlike Windows or Mac where the graphical user interface is part and parcel of the system, much of the beauty of Linux is that you can choose – even create – your own. For some however it’s a cause. There have long been two main Linux desktop camps: Gnome and KDE. Ubuntu had been on the Gnome side, so its defection – to a third camp of its own invention yet – was seen by many as betrayal.

More seriously though, Unity is very clearly a touch-orientated interface. As the name suggests, it’s meant to be similar on all types of device. Rather like Windows 8 this makes it less efficient – or put it another way, more annoying – for users stuck with an old-fashioned mouse. And as Linux tablets barely even exist yet, that means pretty much all of them. For the first time, Canonical were allowing their commercial vision to degrade the user experience.

But that was as nothing compared to the next change. A feature of Unity is that you can find a file or application by typing its name in a search box. If what you type isn’t on the computer, the newest version of Ubuntu continues the search on the Web – specifically, on Amazon.com.

“Crochet Patterns not found. Do you want to purchase Crotchless Pants?”

This unasked-for advertising feels a bit like an invasion of privacy. The building of it right into the operating system feels a lot like a kick in the teeth to the non-commercial ethos that engendered Open Source.

Making money in itself is not the problem. Google, Red Hat, even that old devil IBM make a lot of money out of Linux. Where I think Canonical sail close to the wind is in identifying themselves with Linux more closely than any company before. Independent computing creatives will resent it deeply if they come to be perceived as dupes – or worse, minions – of a commercial giant.

There are two questions here really. The first is whether Canonical/Ubuntu can maintain the goodwill of the wider Open Source Software community. The second is whether they can realise their vision without it. Perhaps they can, but I think it would be a minor tragedy if they did.

One thing over which there’s no question though: Open Source Software can continue without Ubuntu.

____________________________________________________________________________

Next time I’ll talk about why you should try Linux for yourself: Because it’s fascinating, informative, educational – and could save you heaps of money.
